What Are AI Detector Mistakes?

AI detectors are designed to identify whether text was written by a human or generated by artificial intelligence. The mistake that causes the most harm is the false positive, where genuine human writing is flagged as AI-generated. These errors create challenges for students, professionals, and publishers who rely on originality.

How AI Detectors Work

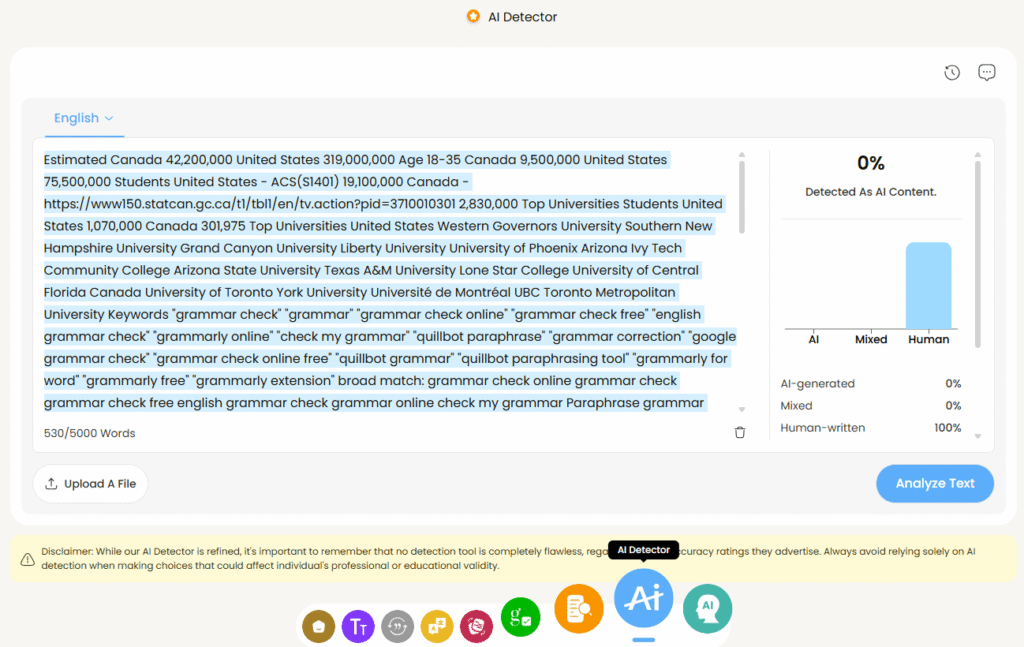

AI detectors typically analyze text patterns such as sentence structure, predictability, and word frequency. Tools like the KreativeSpace AI Detector use advanced models to estimate the likelihood that content was written by AI. While effective in many cases, even the most accurate detectors can produce errors when human writing resembles AI-generated style.
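To make these signals concrete, here is a minimal Python sketch of the kind of surface statistics a detector might look at. It is an illustration only and not how the KreativeSpace AI Detector or any other product works. The function name `style_features` and the four features are assumptions chosen for this example.

```python
import re
from collections import Counter
from statistics import mean, pstdev


def style_features(text: str) -> dict:
    """Compute toy surface statistics; illustrative only, not a real detector."""
    # Split on sentence-ending punctuation and drop empty fragments.
    sentences = [s for s in re.split(r"[.!?]+\s*", text) if s.strip()]
    words = re.findall(r"[a-z']+", text.lower())
    # Word count per sentence, used to measure how uniform the sentences are.
    lengths = [len(re.findall(r"[a-z']+", s.lower())) for s in sentences]
    counts = Counter(words)
    return {
        "mean_sentence_length": mean(lengths) if lengths else 0.0,
        # Low spread means very uniform sentences (a "formulaic" pattern).
        "sentence_length_stdev": pstdev(lengths) if lengths else 0.0,
        # Share of distinct words; low values indicate repetitive vocabulary.
        "type_token_ratio": len(counts) / len(words) if words else 0.0,
        # Share of the text taken up by the single most frequent word.
        "top_word_share": counts.most_common(1)[0][1] / len(words) if words else 0.0,
    }


features = style_features("The cat sat. The cat sat. The cat sat.")
print(features)
```

A human author who writes short, uniform sentences with a small vocabulary would score as "repetitive" on features like these, which is one way to see how false positives arise.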

How Often Do AI Detectors Flag Human Text as AI?

Studies have reported false positive rates anywhere from 5% to 20%, depending on the tool and the dataset. Longer texts with repetitive sentence patterns are more likely to be misclassified. Short, concise writing can also trigger false positives, especially when authors use simple vocabulary.
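A quick calculation shows what a 5% to 20% false positive rate means in practice. The sketch below is a rough illustration. The class size of 200, the 10% share of AI-written texts, and the 90% detection rate are assumed numbers for the example and do not come from any study.

```python
def expected_false_flags(human_texts: int, false_positive_rate: float) -> float:
    """Expected number of human-written texts wrongly flagged as AI."""
    return human_texts * false_positive_rate


def flagged_is_ai_probability(
    ai_share: float, true_positive_rate: float, false_positive_rate: float
) -> float:
    """Probability that a flagged text really is AI-written (Bayes' rule)."""
    flagged_ai = ai_share * true_positive_rate
    flagged_human = (1 - ai_share) * false_positive_rate
    total = flagged_ai + flagged_human
    return flagged_ai / total if total else 0.0


# Assumed scenario: 200 essays, all written by humans.
for rate in (0.05, 0.20):
    print(f"FPR {rate:.0%}: about {expected_false_flags(200, rate):.0f} wrongly flagged")

# Assumed scenario: 10% of texts are AI-written, the detector catches 90% of them,
# and it wrongly flags 5% of human texts.
print(f"{flagged_is_ai_probability(0.10, 0.90, 0.05):.2f}")
```

Under these assumptions, between 10 and 40 of the 200 human essays would be flagged, and only about two out of three flagged texts would actually be AI-written. A flag on its own is therefore weak evidence.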

Factors That Increase AI Detection Errors

Several factors contribute to human text being misclassified as AI:

- Simplistic writing style – Text with short, direct sentences may appear algorithmic.

- Overuse of paraphrasing tools – Students often use free paraphrasers that distort sentence flow, triggering detection systems.

- Technical or repetitive content – Articles with frequent jargon or formulaic explanations resemble AI outputs.

- Cross-language writing – Non-native English speakers sometimes produce text patterns that detectors confuse with machine writing.

For instance, a student summarizing research might unknowingly trigger a false positive because the structure appears formulaic, even if the work is entirely original.

The Impact of AI Detector False Positives

The consequences of AI detection errors can be serious:

- Academic penalties – Students may be unfairly accused of cheating.

- Publishing challenges – Writers may face credibility issues when submitting genuine work.

- Workplace misunderstandings – Professionals could lose trust if their reports are flagged incorrectly.

These consequences are why accuracy matters when evaluating AI detectors.

Reducing AI Detector Mistakes with Better Tools

Platforms like KreativeSpace focus on reducing false positives through advanced modeling and multiple detection layers. By combining AI detection with tools such as the Paraphraser, Plagiarism Checker, and AI Humanizer, users can refine their writing while minimizing risks of misclassification.

For a balanced workflow, KreativeSpace encourages students and professionals to:

- Check drafts with the AI Detector before submission

- Use the Grammar Checker to avoid errors that mimic AI style

- Run text through the Humanizer to improve natural flow

Where AI Detectors Still Struggle

Even with advancements, AI detector accuracy remains imperfect. Studies from major research institutions highlight several limitations:

- False positives continue when detectors are tested against creative writing, poetry, or technical reports.

- False negatives occur when AI text is highly polished and human-like.

- The balance between precision and recall remains difficult to achieve across all writing styles.

These findings suggest that no tool can guarantee 100% reliability, but some detectors are consistently better than others.
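The precision and recall trade-off mentioned above can be shown with a small Python sketch. The counts are hypothetical and only serve to show how a stricter or more lenient flagging threshold shifts the two metrics. The function name `precision_recall` is an assumption for this example.

```python
def precision_recall(true_pos: int, false_pos: int, false_neg: int) -> tuple:
    """Precision and recall for the 'AI-generated' class from raw counts."""
    flagged = true_pos + false_pos    # everything the detector flagged
    actual_ai = true_pos + false_neg  # everything that really was AI-written
    precision = true_pos / flagged if flagged else 0.0
    recall = true_pos / actual_ai if actual_ai else 0.0
    return precision, recall


# Hypothetical strict threshold: few humans flagged, but many AI texts missed.
strict = precision_recall(true_pos=60, false_pos=5, false_neg=40)
# Hypothetical lenient threshold: most AI texts caught, but more humans flagged.
lenient = precision_recall(true_pos=90, false_pos=30, false_neg=10)

print(f"strict:  precision={strict[0]:.2f}, recall={strict[1]:.2f}")
print(f"lenient: precision={lenient[0]:.2f}, recall={lenient[1]:.2f}")
```

In this made-up example, the strict setting wrongly flags fewer human writers but lets more AI text through, while the lenient setting does the opposite. A detector tuned to protect human writers accepts lower recall in exchange for higher precision.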

Best Practices for Students and Professionals

To avoid being caught in an AI detector mistake, follow these best practices:

- Diversify your vocabulary – Overuse of repetitive phrases makes writing seem machine-generated.

- Add personal context – Human experiences and insights are rarely mimicked by AI.

- Run multi-tool checks – Use a combination of plagiarism detection and grammar tools from KreativeSpace for more accurate results.

- Be transparent – If AI tools were used in research, acknowledge them rather than hiding their use.

Future of AI Detection

The future of AI detection tools lies in refining accuracy while reducing false positives. Developers are exploring:

- Machine learning models trained on broader datasets

- Hybrid systems that evaluate both style and context

- Adaptive models that evolve with new AI writing technologies

This evolution should mean fewer AI detector mistakes and better protection for genuine human writers.

The Verdict

So, how often does an AI detector mistake human text for AI? Current estimates suggest anywhere between 5% and 20% of cases, depending on tool quality and text style. While errors can’t be completely avoided, platforms like KreativeSpace offer tools to reduce the risk. By combining AI detection with plagiarism checks, paraphrasing support, and humanization tools, users can maintain originality and protect their credibility.

For those seeking the most reliable workflow, using the KreativeSpace AI Detector alongside its suite of tools remains one of the best strategies to avoid unnecessary AI detection mistakes.