Introduction

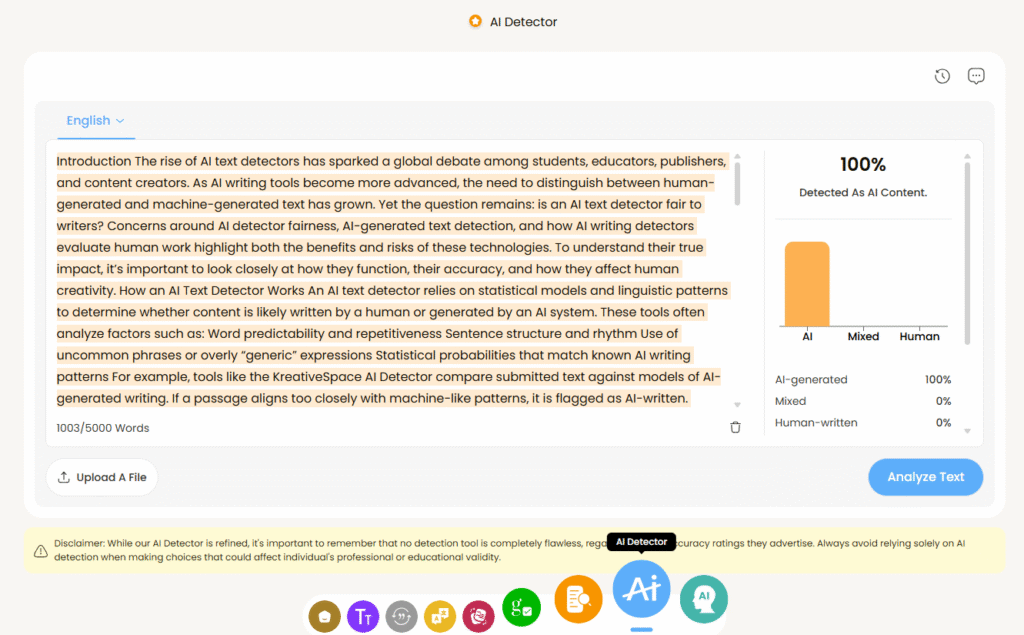

The rise of AI text detectors has sparked a global debate among students, educators, publishers, and content creators. As AI writing tools become more advanced, the need to distinguish between human-written and machine-generated text has grown. Yet the question remains: is an AI text detector fair to writers? Concerns about detector fairness, detection accuracy, and how these tools evaluate human work highlight both the benefits and the risks of the technology. To understand their true impact, it’s important to look closely at how detectors function, how accurate they are, and how they affect human creativity.

How an AI Text Detector Works

An AI text detector relies on statistical models and linguistic patterns to determine whether content is likely written by a human or generated by an AI system. These tools often analyze factors such as:

- Word predictability and repetitiveness

- Sentence structure and rhythm

- How often uncommon phrases appear compared with overly “generic” expressions

- Statistical probabilities that match known AI writing patterns

For example, tools like the KreativeSpace AI Detector compare submitted text against models of AI-generated writing. If a passage aligns too closely with machine-like patterns, it is flagged as likely AI-written.

However, while the process sounds precise, even the best AI detection tools can misinterpret writing. A highly polished essay by a student might appear “too perfect” and be misclassified, leading to accusations of plagiarism or misconduct.
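
To make the signals listed above more concrete, here is a minimal Python sketch that measures three of them on a piece of text: vocabulary variety, variation in sentence length, and repeated word pairs. It is a toy illustration only. The function name `surface_features` and the three feature names are assumptions made for this example, and no real detector (including the one named above) is claimed to work this way.

```python
import re
from collections import Counter
from statistics import mean, pstdev


def surface_features(text: str) -> dict:
    """Compute three toy signals. Illustrative only, not a real detector."""
    # Split into sentences on ., ! or ? and drop empty fragments.
    sentences = [s for s in re.split(r"[.!?]+\s*", text) if s.strip()]
    words = re.findall(r"[a-z']+", text.lower())
    lengths = [len(re.findall(r"[a-z']+", s.lower())) for s in sentences]

    # Count adjacent word pairs; pairs seen more than once hint at repetition.
    bigrams = list(zip(words, words[1:]))
    counts = Counter(bigrams)
    repeated = sum(c for c in counts.values() if c > 1)

    avg_len = mean(lengths) if lengths else 0
    return {
        # Distinct words divided by total words (vocabulary variety).
        "type_token_ratio": len(set(words)) / len(words) if words else 0.0,
        # Spread of sentence lengths relative to their average (rhythm).
        "sentence_length_spread": pstdev(lengths) / avg_len if avg_len else 0.0,
        # Share of word pairs that belong to a repeated pair (repetitiveness).
        "repeated_bigram_share": repeated / len(bigrams) if bigrams else 0.0,
    }


if __name__ == "__main__":
    print(surface_features("Hi. This is a much longer sentence here."))
```

Low variety, very even sentence lengths, and heavy repetition are the kind of surface patterns a statistical tool might weigh. A careful human writer can produce the same patterns, which is exactly why a polished essay can be misclassified.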

Is AI Detector Fairness Possible?

The fairness of AI text detectors is one of the most debated issues in academic and publishing circles today. Whether a detector treats writers fairly depends on several factors:

- Accuracy rates – Detectors may claim high success rates, but even small percentages of error can unfairly impact writers.

- Bias in training data – If detectors are trained on limited datasets, they may misclassify writing styles that fall outside their model.

- Over-reliance on probability – A detector often provides a probability score, not a definitive judgment, but institutions sometimes treat results as absolute truth.
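
A short worked example shows why even a small error rate matters. The sketch below estimates how many human authors would be wrongly flagged in a batch of essays. Every number in it is hypothetical and chosen only for illustration; the function name `expected_flags` and its parameters are likewise assumptions, not figures from any real detector.

```python
def expected_flags(n_essays, ai_share, true_positive_rate, false_positive_rate):
    """Estimate flag counts for a batch of essays (all inputs hypothetical)."""
    ai_written = n_essays * ai_share
    human_written = n_essays - ai_written

    correctly_flagged = ai_written * true_positive_rate
    wrongly_flagged = human_written * false_positive_rate
    total_flags = correctly_flagged + wrongly_flagged

    return {
        "correctly_flagged": correctly_flagged,
        "wrongly_flagged": wrongly_flagged,
        # Of all flagged essays, what fraction were actually human-written?
        "share_of_flags_that_are_wrong": (
            wrongly_flagged / total_flags if total_flags else 0.0
        ),
    }


if __name__ == "__main__":
    # Hypothetical batch: 1,000 essays, 10% AI-written,
    # detector catches 90% of AI text and wrongly flags 2% of human text.
    print(expected_flags(1000, 0.10, 0.90, 0.02))
```

With these assumed numbers, a 2% false positive rate still means about 18 human authors are wrongly flagged, roughly one in every six flags. A score that looks reliable in aggregate can therefore be wrong for a meaningful number of individual writers.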

When detection tools are used to penalize rather than support writers, fairness is compromised. Many experts argue that AI detectors should serve as guides rather than judges, offering insights into writing patterns without labeling text as definitively human or AI.

AI Writing Detector and Human Creativity

One of the biggest criticisms of AI writing detectors is their effect on creativity. Writers who experiment with style, structure, or vocabulary may unknowingly trigger red flags. A student writing in a formal, concise tone could be unfairly classified as using AI because their style matches patterns often associated with machine outputs.

This issue raises questions about whether AI-generated text detection actually protects creativity or suppresses it. Writers may begin second-guessing themselves, adjusting their work to “sound less AI-like” rather than focusing on expressing ideas effectively.

To safeguard against this, many writers use human-focused editing tools like the KreativeSpace grammar checker or the KreativeSpace summarizer before submitting work. These tools refine writing without interfering with the author’s authentic voice, offering a balance between originality and clarity.

Challenges of AI-Generated Text Detection

While AI-generated text detection offers benefits in maintaining academic integrity, it faces several challenges:

- False positives – Writers are wrongly flagged as AI-assisted when their text is 100% original.

- False negatives – AI-generated content passes undetected, particularly when combined with paraphrasing or human editing.

- Rapid AI evolution – As writing models evolve, detectors struggle to keep pace, reducing their accuracy.

- Ethical concerns – Over-reliance on detection can create unfair reputational risks for students and professionals.

Educators, publishers, and institutions must understand that no AI detection tool is perfect. These tools should be used alongside human judgment, not as the sole authority.
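
An institution that wants to know how often its detector makes the first two kinds of error can measure them on a sample of texts whose origin is already known. The following is a minimal sketch of that check, assuming such a labeled sample exists; the function name `error_rates` and the sample data are hypothetical.

```python
def error_rates(results):
    """Compute false positive and false negative rates.

    `results` is a sequence of (is_ai, flagged) pairs, where `is_ai` is the
    known origin of a text and `flagged` is what the detector reported.
    """
    results = list(results)
    humans = [flagged for is_ai, flagged in results if not is_ai]
    ai_texts = [flagged for is_ai, flagged in results if is_ai]

    false_positives = sum(1 for flagged in humans if flagged)
    false_negatives = sum(1 for flagged in ai_texts if not flagged)

    return {
        # Human-written texts that were wrongly flagged.
        "false_positive_rate": false_positives / len(humans) if humans else 0.0,
        # AI-generated texts that slipped through unflagged.
        "false_negative_rate": false_negatives / len(ai_texts) if ai_texts else 0.0,
    }


if __name__ == "__main__":
    # Hypothetical audit sample: (is_ai, flagged)
    sample = [
        (False, True), (False, False), (False, False), (False, False),
        (True, True), (True, False),
    ]
    print(error_rates(sample))
```

Tracking both rates over time also gives a rough picture of whether a detector is keeping pace as writing models change.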

When AI Detection Tools Mislabel Writers

One of the biggest risks with AI detection tools is mislabeling. For example, a university student might spend hours carefully researching and writing an essay, only to have it flagged as AI-generated due to high readability and formal tone. This misclassification can lead to accusations of dishonesty, academic penalties, or reputational harm.

To counter this, writers sometimes use the KreativeSpace AI Humanizer, which adjusts phrasing to align more closely with natural human writing. While this should not replace genuine work, it demonstrates how writers must adapt to the limitations of AI detectors.

Balancing Human vs AI Writing Detector Accuracy

Finding fairness in an AI text detector means balancing accuracy with responsibility. The best approach combines detector output with human review. Instead of blindly trusting a machine score, institutions can:

- Use multiple detectors to compare results

- Allow writers to provide context or drafts to support their authenticity

- Recognize the limitations of AI detection tools and avoid treating them as absolute truth

When combined with manual review, detectors become a helpful aid rather than a threat to writers’ credibility.
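
The practices above can be sketched as a simple triage rule in Python. This is one possible policy under stated assumptions, not a recommended standard: the function name `triage`, the outcome labels, and both thresholds are hypothetical, and the scores are assumed to be probabilities between 0 and 1 from several independent detectors.

```python
from statistics import mean


def triage(scores, flag_threshold=0.8, max_spread=0.2):
    """Decide the next step from several detector scores.

    `scores` maps a detector name to its estimated probability that the
    text is AI-generated. No outcome is a penalty; a person always decides.
    """
    values = list(scores.values())
    if not values:
        return "human_review"

    # Large disagreement between detectors means the scores are unreliable.
    if max(values) - min(values) > max_spread:
        return "human_review"

    # Detectors agree and the average is high: ask the writer for
    # drafts or context before anyone draws a conclusion.
    if mean(values) >= flag_threshold:
        return "request_context"

    return "no_action"


if __name__ == "__main__":
    print(triage({"detector_a": 0.9, "detector_b": 0.3}))
    print(triage({"detector_a": 0.9, "detector_b": 0.85}))
    print(triage({"detector_a": 0.1, "detector_b": 0.2}))
```

The design choice worth noting is that no branch returns a verdict. A high score only triggers a request for drafts or context, which keeps the final judgment with a human reviewer.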

Outbound Reference for Better Understanding

Studies have shown both strengths and weaknesses in AI detection. For example, academic journals like Nature have published findings on the limitations of detection technology, emphasizing that fairness requires careful interpretation rather than automated judgment alone.

KreativeSpace and Fair Writing Support

For writers navigating this evolving landscape, platforms like KreativeSpace provide balance. Beyond offering an AI Detector, KreativeSpace has tools to strengthen authentic writing:

- Paraphraser – Restructure ideas while preserving meaning.

- Citation Generator – Ensure accurate academic references.

- Plagiarism Checker – Protect originality before submission.

- AI Humanizer – Help writers avoid unfair mislabeling.

By using these tools, writers can improve their work and minimize the risks of unfair detection.

The Verdict

The fairness of an AI text detector is not absolute. While these tools help identify machine-generated writing, they also risk mislabeling genuine human authors. Issues of AI detector fairness, AI writing detector accuracy, and the limits of AI-generated text detection show that detectors must be used carefully. Writers should not be penalized solely based on algorithmic scores.

Instead, combining AI detection tools with human judgment and supportive platforms like KreativeSpace helps preserve creativity, originality, and fairness. For writers, the best defense is not fear but preparation: strengthening their skills with editing, grammar, summarizing, and citation tools that highlight the value of authentic work.